Triplex Millennium Silver XabrePRO graphics card

Review date: 28 May 2002. Last modified 03-Dec-2011.

Until now, Silicon Integrated Systems (SIS, or "SiS", as they for some reason want their acronym to be rendered) have not been the chipset provider of choice for the performance PC graphics enthusiast.

SIS have produced quite a lot of graphics chipsets, but they've all been cheap chips for entry level systems and boring business boxes. In those tasks, they're generally perfectly fine, for the money; if a video chipset discourages your employees from spending company time shocklancing enemy Havocs, then that's a feature, not a bug. But if you want to run 3D applications, SIS products have always, to four significant digits, sucked.

This is, however, no longer the case. Because now there's the Xabre.

The Xabre chipset is aimed at the same "high entry level" 3D video adapter market as Nvidia's GeForce4 MX. And it's priced about the same, too. A GeForce4 MX440 card gives you ample 3D power for pretty much any current game on pretty much any current monitor; here in Australia, Aus PC Market will deliver a Leadtek Winfast A170 DDR MX 440 board to you for $AU242.

(NOTE: They don't sell them any more. Get with the program!)

But Triplex's new Millennium Silver XabrePRO card costs, as I write this, exactly the same amount. And, if you look down the feature charts, it seems to beat the A170 handily.

For a start, it's faster. Which is something I'll get to in more detail in a moment.

Also, a cheap MX 440 card like the A170 isn't likely to have twin monitor connectors. So Nvidia's drivers may let you access "nView" multi-monitor features, but all you can use them with is the card's TV output, which isn't going to let you do proper dual screen computing.

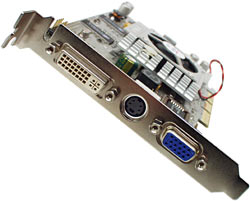

The Triplex Xabre card has a standard 15 pin D-sub VGA connector, a Y/C (S-Video) TV output, and a DVI-I connector. It can run two outputs at once, in mirror or true multi-monitor mode. You also get a DVI-to-RGB adapter, so you can easily hook up two regular VGA-connector monitors.

The Xabre also has a full DirectX 8.1 feature set, which means it can handle all of the pretty features used by the newest and shiniest Direct3D games. GeForce4 Titanium cards have full DX8.1, but GeForce4 MX cards don't; they don't even have a full GeForce3 feature set.

In the real world, this isn't a very big deal. Everything still runs on a GeForce4 MX, and it generally runs very quickly indeed; you might have noticed that all of those people with GeForce2s haven't suddenly found themselves unable to play new games. It's going to be some time before DX8.1 compatibility is a must-have feature. But hey, it doesn't hurt to have it, for the same price.

The Xabre is also alleged to embrace compelling relationships and grow ubiquitous synergies between the paradigm frontiers of the blah blah blah, with all sorts of trademarked "technologies", which seem to be broadly similar to those claimed for every other new graphics chip since the invention of dirt.

Frankly, marketing people have so comprehensively poisoned this particular well that I no longer even attempt to figure out which of the new "technologies" mean a darn thing. They're like promises from politicians on the campaign trail; life's too short for it to be worthwhile paying attention.

To be fair, I don't think any graphics chip company has ever described their product as a "mysterious knight" before. But that doesn't mellow me out much.

While we're on the subject of apparently cool stuff that doesn't actually matter very much, the Xabre also supports AGP 8X, the latest doubling of Accelerated Graphics Port speed. AGP 8X brings AGP's theoretical peak bandwidth up to about 2.1 gigabytes per second, which matches that of PC2100 DDR memory.
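That "theoretical bandwidth" figure is just clock speed times transfers per clock times bus width. Here's a minimal Python sketch of the arithmetic, assuming the usual published figures: a 66.67MHz AGP base clock on a 32 bit bus, and PC2100 as 133.33MHz DDR on a 64 bit module. Real transfers never get near these peaks.

```python
# Peak theoretical bandwidth = clock x transfers per clock x bus width in bytes.
# Assumed figures: AGP base clock 66.67MHz with a 32 bit bus;
# PC2100 DDR as a 133.33MHz clock, two transfers per clock, 64 bit module.

def peak_bandwidth_mb_s(clock_hz, transfers_per_clock, bus_width_bits):
    """Return peak bandwidth in megabytes (10^6 bytes) per second."""
    return clock_hz * transfers_per_clock * (bus_width_bits / 8) / 1e6

AGP_CLOCK_HZ = 200e6 / 3     # 66.67MHz
PC2100_CLOCK_HZ = 400e6 / 3  # 133.33MHz

# Each AGP mode doubles the transfers per clock of the one before it.
agp_modes = {f"AGP {x}X": peak_bandwidth_mb_s(AGP_CLOCK_HZ, x, 32) for x in (1, 2, 4, 8)}
pc2100 = peak_bandwidth_mb_s(PC2100_CLOCK_HZ, 2, 64)

for mode, mb_s in agp_modes.items():
    print(f"{mode}: {mb_s:.0f} MB/s")
print(f"PC2100 DDR: {pc2100:.0f} MB/s")
```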

Not that anything's likely to be able to use most of that bandwidth to send data to the graphics card, mind you. Certainly not texture data from main memory, which often has to serve multiple simultaneous requests from various subsystems, and which has large overheads even when it's only got one job to do.

AGP 8X may be the fastest AGP mode yet, but it's still much slower than on-card video memory, so you still need enough memory on the card for all of the textures you want to use.

Besides, practically nobody on the planet has an AGP 8X capable motherboard yet, as I write this.

The "Xabre 400" chipset, also known as the Xabre Pro, is the first to be released; there are two slower models and one faster one in the pipeline. I'm pretty sure that this Triplex card is the only retail Xabre card in existence so far.

It's a good looking thing, with Triplex's distinctive silver finish (also used on their Nvidia-chipset cards, including the GeForce4 Ti4600 one I review here).

The main chip cooler on the Triplex card is quite impressive, but you don't get any heat sinks on the RAM chips. That's OK, though. RAM-sinks on video cards are like spoilers on passenger cars; buy the more expensive, faster model and you get the extra frill thrown in. It doesn't do much of anything, though.

The eight memory chips (four on the front and another four on the back of the card) are all Etron Technology EM658160TS-3.3s. They're slightly faster than any of the EM658160 variants listed on the manufacturer's page; the -3.3 version is the 3.3 nanosecond incarnation of this 64 megabit chip, and so it ought to have a specified ceiling speed of 300MHz.

So you've got a thoroughly acceptable 64 megabytes of memory (eight times 64 megabits, eight bits to the byte), which should be happy running at an effective 600MHz, after taking DDR doubling into account. Or a bit more, with luck, a following wind, and perhaps also some extra cooling.
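The chip arithmetic above is easy to check. A minimal Python sketch, assuming (as the text does) that a chip's nanosecond rating is its minimum clock period, so the clock ceiling is simply the reciprocal; 1/3.3ns actually works out to about 303MHz, which rounds to the "300MHz" figure.

```python
# Memory arithmetic for the Triplex card's Etron EM658160TS-3.3 chips.
# Assumption: the -3.3 suffix is a 3.3ns minimum clock period.

def clock_ceiling_mhz(period_ns):
    """Maximum clock implied by a minimum clock period (1 / period)."""
    return 1000 / period_ns

def ddr_effective_mhz(clock_mhz):
    """DDR memory transfers data on both clock edges, doubling the effective rate."""
    return clock_mhz * 2

def total_megabytes(chip_count, megabits_per_chip):
    """Total card memory: chips x megabits per chip / 8 bits per byte."""
    return chip_count * megabits_per_chip / 8

ceiling = clock_ceiling_mhz(3.3)  # about 303MHz
print(f"Clock ceiling: {ceiling:.0f}MHz, {ddr_effective_mhz(ceiling):.0f}MHz effective")
print(f"Card memory: {total_megabytes(8, 64):.0f}MB")
```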

This memory isn't running at 300/600MHz on this card, though; the Xabre Pro has 250MHz core and RAM (before DDR doubling) clock speeds, by default.

Hi-ho for some overclocking, then.

You can do that with the standard Triplex drivers, which seem to be the only ones available for the Xabre at the moment. Triplex themselves don't have any Xabre drivers for download; heck, you can't even download drivers from the Xabre site itself yet. The driver pages are "under construction". So don't lose the driver CD that comes with the card.

Drivers for new video chipsets are, typically, crummy. Inadequate development, inadequate testing, personality defects and bugs galore. Nvidia's drivers are a big reason to buy cards that use their chipsets; there's exactly one driver package for each Windows variety, and it covers every Nvidia chipset since the original TNT, and the drivers inside that package have been thoroughly tweaked and tested over the course of years of updates.

Perfect, Nvidia's drivers aren't. About as good as you can get, they are.

SIS seem to have done an OK job with the core functions of the Xabre Windows drivers (they're not likely to bother with Linux drivers; third party ones are a work in progress). But I didn't check a bunch of games on different Windows flavours to be sure; I just ran a few tests on a WinXP Pro machine. For all I know, the card catches fire if you run Quake 2 on WinME. But the drivers smelled all right to me.

The icing that Triplex have put on the SIS code core, though, is... quirky.

As a representative sample of the Triplex visual style, check out this overclocking control panel:

Yee-ow.

If this pub-carpet school of interface design doesn't turn your crank, rest assured that various driver features can also be accessed via what look like cheesy software MP3 player interfaces, instead:

This is a family Web site, so I'll refrain from commenting further on this.

The overclocking controls let you wind up the core and RAM clock speeds quite a bit, but the card, she does not want to know. The results of my overclocking experiments were somewhat inconsistent - a given speed would hang the machine when I tried it one time, but work when I restarted and tried again. But none of the results were particularly impressive.

RAM chips that're rated for a speed well above the speed at which a given card runs them are all very well, but there are limiting factors besides the RAM itself. Those factors are in full effect on the Triplex Xabre board, as far as I can see.

Setting the RAM speed slider to 300MHz instantly, and not very surprisingly, borked the computer.

275MHz mangled the display, but at least I could cancel out of it. 265MHz, the first time I tried it, caused more borkitude, with no escape. Then, after a restart, it worked OK.

Raising the core speed to just 260MHz took me on a trip to the magical land of the BSOD when I tried to do a 3D test, but a 250MHz core with 265MHz RAM was OK, although it didn't make anything more than 1% faster.

That was the best the Triplex card could do. Perhaps this was something to do with the drivers; they did worrying things like setting the clock sliders all the way to the left, sometimes, when I went to the "DisplaySetting" tab for the first time after a reboot. Clicking Cancel and then going back to DisplaySetting solved that problem every time it happened, putting the sliders back in the right place. But stuff like this didn't inspire confidence.

Triplex provide a couple of optional extras along with the drivers themselves - a pop-up menu thing that gives quick access to Display Properties and other features and is therefore somewhat useful, and a multi-function bar thing whose best feature is that you don't have to install it. That's it for the software bundle; no cheapo DVD player software, no colour calibration thingummy, no bundled game you don't want to play.

In contrast, the software bundle that comes with the Leadtek A170 includes all of the above.

Big deal.

More significantly, the A170's 64MB of DDR memory is made up of Samsung K4D263238M-QC40 chips, which are four nanosecond chips and should be good for 250 un-doubled megahertz, or 500MHz after DDR's worked its magic.

The MX 440 only runs at a core speed of 270MHz and a RAM speed of 200MHz, by default. The general consensus seems to be that no core overclock worth bothering with is likely to be possible, but RAM speeds of 225 to 250 pre-doubling megahertz are routinely attainable. That'll net you a worthwhile speed increase, if you're running a high enough resolution and/or anti-aliasing mode.
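To put those RAM overclocks in proportion, here's a small Python sketch comparing each memory clock (pre-DDR-doubling) with its stock setting and with the chips' rated ceiling. The clock figures are the ones quoted in this review. As the Xabre's 250/265 result showed, a given percentage of extra memory clock doesn't turn into the same percentage of extra frame rate, so treat these as upper bounds on what a memory overclock can buy you.

```python
# Compare memory clocks (pre-DDR-doubling MHz) with stock speed and rated ceiling.
# Figures are those quoted in the review: 4ns Samsung chips rated at 250MHz,
# 3.3ns Etron chips rated at about 300MHz.

def percent_increase(stock_mhz, new_mhz):
    """Percentage by which new_mhz exceeds stock_mhz."""
    return (new_mhz / stock_mhz - 1) * 100

cards = [
    # (description, stock MHz, overclocked MHz, rated ceiling MHz)
    ("GeForce4 MX 440 (A170), low end", 200, 225, 250),
    ("GeForce4 MX 440 (A170), high end", 200, 250, 250),
    ("Xabre Pro (Triplex), best that worked", 250, 265, 300),
]

for name, stock, oc, rated in cards:
    gain = percent_increase(stock, oc)
    headroom = percent_increase(stock, rated)
    print(f"{name}: +{gain:.1f}% over stock, rated ceiling is +{headroom:.1f}%")
```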

Probably not, however, enough of an increase to make up for the Xabre's natural speed advantage over the MX 440. Not unless you've got a pretty big monitor, anyway.

I tried out both the Millennium Silver XabrePRO and the Leadtek A170 on what now, alarmingly enough, qualifies as a medium performance PC. It's a Thunderbird Athlon machine, running at 1477.5MHz (according to WCPUID, anyway) on an Asus A7A266 motherboard, with 512MB of humble PC133 RAM. Roughly Athlon XP 1600+ CPU performance, let down a bit by the RAM, in other words. Tests were performed under Windows XP Professional.

Mad Onion's 3DMark2001 SE is a thorough and quite realistic DirectX 8 benchmark. The Xabre can't currently handle the Advanced Pixel Shader test, but SIS say that's Mad Onion's fault, not theirs.

With the defective test disabled, the Xabre at stock speed beat the MX 440 at stock speed by about 33% in 1024 by 768, using 32 bit colour and compressed textures. It won by about the same amount when both cards had 2X full screen anti-aliasing turned on.

In the same resolution with 4X FSAA, neither card was performing particularly well, but the Xabre still won by more than 11%. That gap would be pretty much annihilated by a reasonable RAM overclock on the MX 440 card.

In 1600 by 1200 with no FSAA, the Xabre won by less than 10%. Then again, it managed 1600 by 1200 with 2X FSAA at a respectable 66% of its no-FSAA speed. The MX 440 is less memory efficient, and couldn't even attempt that test.

For those who can't comprehend benchmark numbers unless they're presented in a cheesy Excel graph, here one is.

I wanted to do some OpenGL tests next, but was defeated by the fact that the drivers that come with the retail Xabre card don't seem to provide any way to turn off vertical sync (vsync), which tells the video card to wait for a new screen refresh before displaying another frame.

With vsync turned on you won't get "tearing" caused by the screen updating faster than it refreshes, but you also end up with a hard cap on your frame rate that's equal to the refresh rate. Try as I might, I couldn't turn vsync off; turning it off elsewhere (in, for instance, the Quake III Arena config file) didn't work.
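The vsync cap is easy to model. Here's an idealised Python sketch, assuming every frame takes exactly the same time to draw. The simple cap is roughly what you get with triple buffering; the double-buffered case reflects the common situation where a frame that misses a refresh has to wait for the next one, so the displayed rate snaps down to a whole fraction of the refresh rate. Either way, benchmark numbers taken with vsync on mostly tell you your monitor's refresh rate.

```python
import math

def vsync_fps(render_fps, refresh_hz, double_buffered=True):
    """Frames actually displayed per second with vsync on (idealised model).

    Assumes every frame takes exactly 1 / render_fps seconds to draw.
    """
    if not double_buffered:
        # Simple cap: never more displayed frames than screen refreshes.
        return min(render_fps, refresh_hz)
    # Double buffering: each frame occupies a whole number of refresh
    # intervals, so the rate snaps to refresh_hz / 1, / 2, / 3 ...
    intervals_per_frame = math.ceil(refresh_hz / render_fps)
    return refresh_hz / intervals_per_frame

for fps in (200, 85, 60, 50):
    print(f"{fps} fps rendered -> {vsync_fps(fps, 85)} fps displayed at 85Hz")
```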

So much for that, then.

As quirks go, though, the locked-on vsync paled into insignificance compared with the noise.

The Xabre card makes a noise when it's in 3D mode.

It's usually a vague, high pitched, white-noise-y rasp. Sometimes, as in the 3DMark2001SE Point Sprites test, it's distinctly different - more of a whistling noise. I've never heard anything like it from a video card before. It's bizarre. It's not really annoyingly loud, but you'd be able to hear it without taking the side off your case.

This is not an oddity of the one card I got for review, either. I procured another one. Same noise. A friend tested a third. Same noise.

Perhaps this is just the sound of a Triplex Xabre board; a couple of readers have suggested that it's probably the board's power supply components doing it. A mere almost-four-years after this review went up, another reader told me that he bets it's the DC to DC converter, and favoured me with the information that he used to deal with noisy geometry correction chokes in Philips G11 by cracking 'em with a hammer.

Usually, solid state devices making a clearly audible noise are in the process of being electronically beaten to death. By misdesigning a power supply, I once managed to make a solid state battery charger tick like a clock until it expired.

(It did so in such a thorough way that the note later attached to the charger by a thwarted repair technician read "murdered by owner".)

But looping demos on the Triplex cards didn't seem to hurt them, so I think we can rule out over-voltage.

Overall

Frankly, I wouldn't buy a Xabre card right now. The thing seems to be decently fast, but it has sufficient points of weirdness that I think it'd be a good idea to hang about a bit and see whether any of them turn out to be symptomatic of serious problems.

If someone gave me a Triplex Xabre as a present, though, I wouldn't go straight out and try to swap it for a GeForce4 MX. It doesn't seem terribly likely that it'll go up in a puff of smoke, none of its driver quirks are crippling (well, unless disabling OpenGL vsync is essential for your continued happiness), and the little melon-picker is a dual-monitor board with respectable 3D performance.

So wait and see on this one, I say.

If you've got the price of a Xabre board burning a hole in your pocket and you're currently using a thoroughly inadequate graphics card, then play it safe and get a GeForce4 MX instead. If you can stand to wait for about a month, though, do. By then, enough people should have prodded the Xabre around that Usenet and review directories should give you a definite yea or nay on the thing.

Right now, though, it's a Video Card Of Mystery. And I'd rather not pay for one of those, if it's all the same to you.
